If you spend any time online, chances are you’ve heard of Giving Tuesday, a phenomenon that emerged out of a non-profit organization’s effort to counterbalance the consumer-oriented post-Thanksgiving splurges of “Black Friday” and “Cyber Monday” with a day devoted to generosity toward others. Giving Tuesday is but one example of what can be accomplished by eliciting the charitable impulse that is inherent in the human person.

The idea for dedicating the Tuesday after Thanksgiving to charitable giving was born just nine years ago, but it has already achieved massive recognition. As of 2015, a survey found that only 18 percent of Americans were aware of it, but that number is surely much higher now. The totals for 2020 have not yet been finalized, but more than $500 million was given in 2019 and predictions see 2020 exceeding that mark. Corporations have bolstered the effort by promoting it in various ways: most prominently, Facebook matches donations made through its platform.

Giving Tuesday is but one small piece of a worldwide philanthropic movement that is gigantic in both scale and effect. In the United States alone, donors contribute more than $400 billion per year to non-profit organizations. Removing corporations and foundations from the mix still leaves more than $2 billion given by individuals. Skeptics may rightly point out that the US tax code incentivizes such giving, but the fact is that most taxpayers take standard deductions and thus are not rewarded for their donations. In addition, these charitable totals do not reflect in-kind gifts or volunteer hours that are not tracked as charitable giving. If these were added, the total (non-tax-related) contributions would doubtless balloon well into the billions.

In other words, it is common for people to help other people, and money is only one way it happens. This helpfulness is obvious whenever a high-profile natural disaster hits: volunteers and donations come pouring in from around the world. But it also happens constantly in ways that rarely receive publicity: helping a struggling student with school; caring for a child or an elderly person; giving someone a ride to the store or to church; assisting a neighbor with yard work; offering a meal to someone in need.

In these and countless other ways, we demonstrate solidarity, which recent popes have defined as “a sense of responsibility on the part of everyone with regard to everyone.” It is natural to feel and exhibit concern for those in our immediate sphere, our friends and family. And it is true that we have a primary obligation to care for those nearest to us. But the principle of solidarity urges us to look beyond those with whom we interact regularly and to consider the needs of a wider world. Without denying diversity, solidarity thus points to what is common in humankind. It stresses the unity of human persons as sons and daughters of one Father. When we give—monetarily or otherwise—to charitable causes within or beyond our local communities, we express and affirm this unity. We recognize the reciprocal obligations we have to each other as fellow creatures of a benevolent Creator.

The non-profit world is vast and various, and there are surely some causes and organizations with which we would disagree, perhaps strongly. But Giving Tuesday nonetheless represents a positive impulse, one that we do well to encourage. Going outside of ourselves is a healthy practice, for selflessness breeds self-content. This venerable spiritual paradox is conveyed in the words of the Prayer of St. Francis: “It is in giving that we receive.” As a devout Christian, St. Francis subscribed to an even more profound truth that gives further impetus to our generosity. “If anyone has material possessions and sees a brother or sister in need but has no pity on them, how can the love of God be in that person?” (1 John 3:17). The things of this world are passing; let us use them, while we may, to build up the Kingdom.

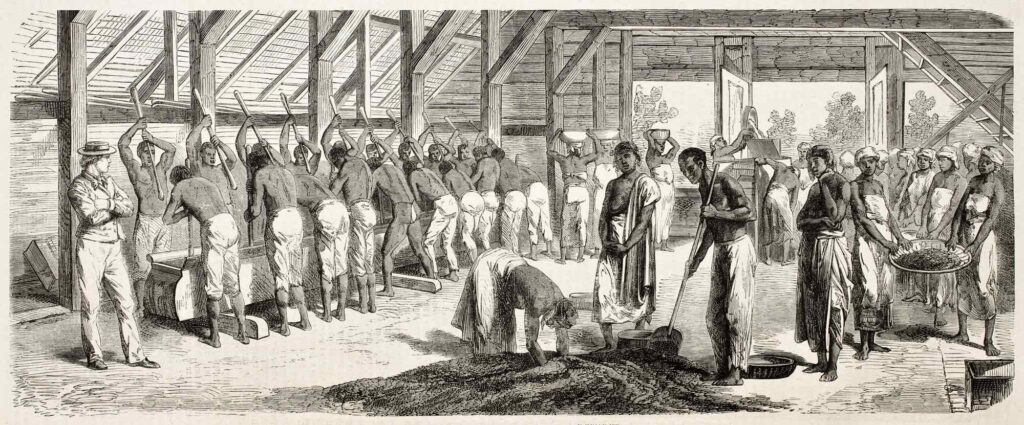

From the Liberty Bell to the slogan “Give me liberty or give me death,” the language and symbolism of liberty have permeated American political culture since its inception. Until recent decades, social movements strove to attach themselves to that tradition, because identification with freedom virtually ensured success. “Free at last! Free at last! Thank God Almighty, we are free at last!” were the unforgettable final words of Martin Luther King Jr.’s momentous “I Have a Dream” speech. No one, whether left, right, or center, wanted to be associated with oppression or infringement of liberty.

Consider a city council that has a million dollars to spend. Options before them are building a new park or replacing a failing bridge. Each project costs a million dollars. They can’t do both. If they choose the park, some will complain that they are endangering residents by neglecting the bridge. If they choose the bridge, some will complain that they are undermining the welfare of children by not providing a safe place to play. The council members can study both projects carefully and fully appreciate the

We need to remind ourselves that it’s the people involved in day-to-day work, not politicians and entertainers, who really make the world go round. Sometimes, we’re forced to remember: for example, when COVID knocks out workers at a few meat processing plants and the price of beef skyrockets. The people putting food on our table, keeping our utilities working, building our homes, and feeding, clothing, and teaching the next generation are living “quiet and useful” lives. They are not the kinds of lives that attract book or movie deals, but they are indeed very useful.

Although such efforts are often couched in the rhetoric of justice for a deprived people and rationalized as a desire to prop up those whose starting line was way behind, they serve only to sever the connection between reward and accomplishment, a connection that leads to success only through struggle in spite of obstacles. Offering brownie points to “endangered communities” is not much more than degrading and condescending paternalism, however well-intentioned.
